Why False Positives Are Important

Most forensic examiners are familiar with seeing false positives in their search or processing results. Any tool that carves data during searching or processing will produce some false positives.

I often get questions from forensic examiners (both new and experienced) about whether the data that Magnet IEF or Magnet AXIOM has found is valid. Without seeing the data myself, it’s quite difficult to determine whether the information is valid, so I’ll typically respond with several follow-up questions to understand what the examiner is seeing. This helps me assess the likelihood of the data being either valid or a false positive.

Most forensic tools either parse structured data from a file system and present it to an examiner, or search sector by sector, carving files (or data) out of unstructured evidence. Many tools do both.

Parsing

Parsing data from an MFT or root directory will have very few false positives because the structure of the file system is usually well defined and there are many checks and balances to ensure that the data being analyzed is represented exactly as expected.

Carving

Carving unstructured data is fuzzier, and the likelihood of false positives increases quite a bit. Most tools carve data based on a set of signatures: a header, a footer, or any other unique identifier or constant that signifies the data belongs to a particular app or artifact. This identification works independently of the file names, paths and other file system attributes that typically help classify data.
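To make the header/footer idea concrete, here is a minimal Python sketch of signature-only carving. It assumes the evidence has already been read into a `bytes` object, and it uses the JPEG start marker (0xFF 0xD8 0xFF) and end marker (0xFF 0xD9) purely as an illustration; the function name and the `max_len` cap are hypothetical. Nothing here validates what sits between the markers, so every range it returns is only a candidate.

```python
def carve_header_footer(data: bytes, header: bytes, footer: bytes,
                        max_len: int) -> list[tuple[int, int]]:
    """Return (start, end) ranges that begin with `header` and end with `footer`.

    Signature-only carving: no structural validation is done, so every
    returned range is just a candidate that may be a false positive.
    """
    hits = []
    start = data.find(header)
    while start != -1:
        # Look for the first footer after the header, within max_len bytes.
        end = data.find(footer, start + len(header), start + max_len)
        if end != -1:
            hits.append((start, end + len(footer)))
        # Keep scanning from the next byte; overlapping candidates are allowed.
        start = data.find(header, start + 1)
    return hits


# Illustrative JPEG markers: start-of-image and end-of-image.
JPEG_HEADER = b"\xff\xd8\xff"
JPEG_FOOTER = b"\xff\xd9"

sample = b"junk" + JPEG_HEADER + b"\xe0abc" + JPEG_FOOTER + b"tail"
print(carve_header_footer(sample, JPEG_HEADER, JPEG_FOOTER, max_len=1024))  # [(4, 13)]
```

Because it stops at the first footer after each header, a JPEG with an embedded thumbnail would be cut short, and stray header bytes followed by stray footer bytes become a hit. Those are exactly the kinds of matches that later validation has to weed out.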

Carving also doesn’t necessarily mean recovering deleted data. Deleted data can be parsed from the file system or carved from unallocated space. Quite often we will carve data from allocated files (such as pictures embedded in a document) or parse deleted data out of unallocated space based on its MFT record. Whether the user deleted something is independent of whether it was carved.

An Argument for False Positives

In general, false positives are not bugs in your forensics software, they’re simply matches to the criteria used to carve through a hard drive or mobile phone with potentially several terabytes of unstructured data.

There are bound to be some questionable matches for your signatures because the possible combinations of data are almost infinite. If your tool produces no false positives, I would argue that its carving signatures are not aggressive enough and it is potentially missing relevant data for an app or artifact.

With IEF and AXIOM, we could restrict our carving for many apps, but that would limit your results and potentially miss important evidence. In my investigations, I would rather get 10 false positives than one false negative.

Now, this is not meant to be an apology for any of your tools (including ours), or to give software license to create hundreds or thousands of false positives in your case. We work to minimize the number of false positives recovered when carving data from your evidence, but this is challenging when apps and their data formats are constantly changing. At Magnet Forensics, we will often carve data based on a signature for the file type or artifact and then run one or more validations on the data to confirm that it is the artifact in question.

For example, most examiners know that a file with the header SCCA (0x53 0x43 0x43 0x41) at offset 4 is likely a Windows prefetch file. However, carving for SCCA in unallocated space will also yield quite a few hits that end up being false positives, alongside the valid prefetch files you’re looking for. To minimize false positives, it’s valuable to understand the entire structure of the file in question when possible. Tools can then check for other unique items like strings, timestamps or other values that reduce the number of matches, especially in unstructured data where you don’t always know where one file ends and another begins.
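The sketch below shows the difference between searching for SCCA alone and adding structural checks. It assumes the uncompressed prefetch layout as commonly documented: a 4-byte little-endian format version at offset 0 (17, 23, 26 or 30 for XP through Windows 10), SCCA at offset 4, the file size at offset 12, and a UTF-16LE executable name in the 60 bytes starting at offset 16. Windows 10 usually stores prefetch files MAM-compressed, so those would not match at all without decompressing first. The size cap and the name checks are illustrative thresholds, not Magnet’s actual validation.

```python
import struct

SCCA = b"SCCA"
# Format versions commonly documented for uncompressed prefetch files
# (XP/2003, Vista/7, 8.x, 10). Treat this set as an assumption.
KNOWN_VERSIONS = {17, 23, 26, 30}
MIN_HEADER = 84              # assumed minimum size for the fields checked here
MAX_SIZE = 10 * 1024 * 1024  # arbitrary sanity cap on the declared file size


def looks_like_prefetch(data: bytes, start: int) -> bool:
    """Validate a candidate prefetch header beginning at `start`."""
    if start < 0 or start + MIN_HEADER > len(data):
        return False
    version, sig = struct.unpack_from("<I4s", data, start)
    if sig != SCCA or version not in KNOWN_VERSIONS:
        return False
    (declared_size,) = struct.unpack_from("<I", data, start + 12)
    if not MIN_HEADER <= declared_size <= MAX_SIZE:
        return False
    # Executable name: UTF-16LE, null-padded, in the 60 bytes at offset 16.
    raw_name = data[start + 16:start + 76]
    name = raw_name.decode("utf-16-le", errors="replace").split("\x00", 1)[0]
    return bool(name) and name.isprintable() and "\ufffd" not in name


def carve_prefetch(data: bytes) -> tuple[int, list[int]]:
    """Return (raw SCCA hit count, offsets of candidates that pass validation)."""
    raw_hits, valid = 0, []
    pos = data.find(SCCA)
    while pos != -1:
        raw_hits += 1
        # SCCA sits at offset 4, so the candidate file starts 4 bytes earlier.
        if looks_like_prefetch(data, pos - 4):
            valid.append(pos - 4)
        pos = data.find(SCCA, pos + 1)
    return raw_hits, valid


# Build a fake candidate: version 23, SCCA, an unchecked field at offset 8,
# a declared size of 200 bytes, then the executable name.
name = "NOTEPAD.EXE".encode("utf-16-le").ljust(60, b"\x00")
candidate = (struct.pack("<I4sII", 23, SCCA, 0x11, 200) + name).ljust(200, b"\x00")
evidence = b"random SCCA text " + candidate + b"more"
print(carve_prefetch(evidence))  # (2, [17])
```

Here the text "SCCA" embedded in unrelated data still counts as a raw hit but fails the version check, which is the same effect described above on a much larger scale.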

Even with these checks you may still get some false positives, but you will get far fewer than by searching for SCCA alone. This technique helps for many file and artifact types, but it isn’t always as easy or clear-cut.

If you don’t know the structure of the file, or there aren’t any additional checks you can perform, you may still want to carve for that data, but the number of false positives is going to be much higher. It’s a tradeoff between making sure you recover all the potential evidence and minimizing the amount of data you’ll need to review after processing.

Giving examiners the ability to quickly identify false positives is another way tools can greatly improve results.

How to Identify False Positives

The first thing I do when a case has finished processing is take a quick look at all of the recovered artifacts to see if there are any interesting chat programs, searches, browser data, etc. I’ll typically start with the artifact that has the most hits, since it was likely used more than the others. I like to call this “the low-hanging fruit.”

When it comes to false positives, you may find that all of the hits for a given artifact appear to be invalid, or that only one or two messages among several valid ones were carved incorrectly. When all of the hits for an app are invalid, they are easy to spot and identify as false positives, because the data looks messy and doesn’t make any sense.
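A crude way to triage the “all hits look messy” case is to measure how much of each carved message is printable text. The following Python sketch does that; the function names, the 0.9 per-message threshold and the 0.8 share of flagged messages are arbitrary assumptions for illustration, and a legitimate message with unusual content could still trip it.

```python
def printable_ratio(text: str) -> float:
    """Fraction of characters that are printable (common whitespace counts)."""
    if not text:
        return 0.0
    good = sum(1 for ch in text if ch.isprintable() or ch in "\r\n\t")
    return good / len(text)


def messy_messages(messages: list[str], threshold: float = 0.9) -> list[int]:
    """Indexes of messages whose printable ratio falls below `threshold`."""
    return [i for i, msg in enumerate(messages) if printable_ratio(msg) < threshold]


def artifact_looks_invalid(messages: list[str], threshold: float = 0.9,
                           share: float = 0.8) -> bool:
    """True if most hits for an artifact look messy, i.e. likely all false positives."""
    if not messages:
        return False
    return len(messy_messages(messages, threshold)) / len(messages) >= share


hits = ["See you at 5", "ok", "\x00\x07\x1b\x02ab"]
print(messy_messages(hits))          # [2]
print(artifact_looks_invalid(hits))  # False
```

A check like this only surfaces candidates for review; it says nothing about whether a clean-looking message is valid.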

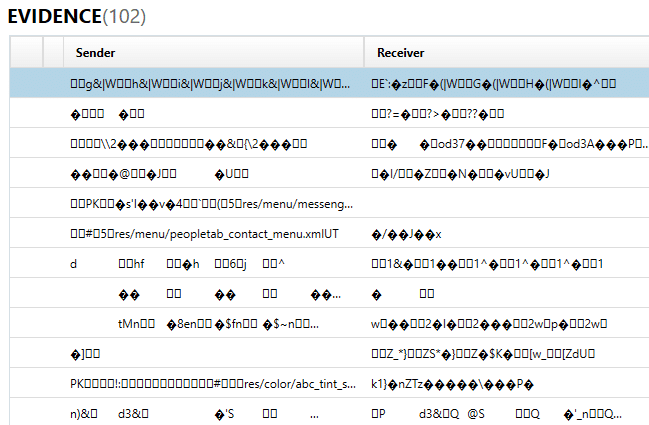

Here’s an example of TextMe messages that are clearly false positives and not relevant to my investigation. These are the easiest to identify and most tools should be able to fix this type of false positive by improving their carving (and, yes, this is one we recently became aware of and we are looking at ways to improve it).

The other type of false positive is a single invalid hit carved among several valid ones. These are usually outliers and can vary from image to image, since it’s not always clear what in the image causes them. It would be very difficult for a tool to eliminate them automatically without risking missing valid data.

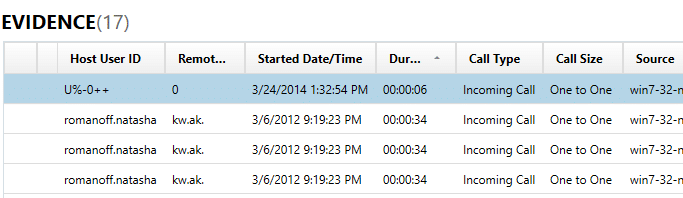

Above is an example of an invalid hit found among Skype calls. Some of the data lines up, but other information is obviously invalid. The Host User ID is messy and unlikely to be a real Skype username. Another giveaway? The Remote User ID is 0, which is also unlikely. The Started Date/Time is questionable too, though not immediately obvious: all of the valid hits occur around the same time in March 2012, while the false positive shows March 2014. After closer analysis, that date doesn’t line up with any other data on the device, but that kind of insight only comes from further analysis.
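The checks described for that Skype hit can be written down as simple rules. This is a hedged sketch only: `CallRecord` and its fields are hypothetical stand-ins for whatever your tool exports, the username pattern (a letter followed by 5 to 31 letters, digits or . , - _) is an assumption about valid Skype names, and the 180-day gap from the median call time is an arbitrary threshold.

```python
import re
import statistics
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class CallRecord:
    """Hypothetical shape of a carved call; field names are illustrative."""
    host_user_id: str
    remote_user_id: int
    started: datetime


# Assumed username rule: a letter followed by 5-31 letters, digits or . , - _
USERNAME_RE = re.compile(r"[A-Za-z][A-Za-z0-9.,_-]{5,31}")


def suspicious_reasons(rec: CallRecord, reference: datetime,
                       max_gap: timedelta) -> list[str]:
    reasons = []
    if not USERNAME_RE.fullmatch(rec.host_user_id):
        reasons.append("host user ID does not look like a username")
    if rec.remote_user_id == 0:
        reasons.append("remote user ID is 0")
    if abs(rec.started - reference) > max_gap:
        reasons.append("start time is far from the other calls")
    return reasons


def review_calls(records: list[CallRecord],
                 max_gap: timedelta = timedelta(days=180)) -> dict[int, list[str]]:
    """Map record index -> reasons it looks like a false positive."""
    if not records:
        return {}
    # median_low works on datetimes because it only sorts and picks an element.
    reference = statistics.median_low(r.started for r in records)
    flagged = {}
    for i, rec in enumerate(records):
        reasons = suspicious_reasons(rec, reference, max_gap)
        if reasons:
            flagged[i] = reasons
    return flagged


calls = [
    CallRecord("alice.smith", 1234, datetime(2012, 3, 1, 10, 0)),
    CallRecord("alice.smith", 5678, datetime(2012, 3, 3, 14, 0)),
    CallRecord("alice.smith", 9012, datetime(2012, 3, 5, 9, 0)),
    CallRecord("\x13Zq#@", 0, datetime(2014, 3, 2, 8, 0)),
]
print(review_calls(calls))  # only index 3 is flagged, for all three reasons
```

The median is used rather than the mean so that a single wildly wrong timestamp cannot drag the reference point toward itself.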

Check Source Data

If you’re still not sure whether a hit is a false positive, the best way I’ve found to check is to look at the source location where the tool found the artifact. Most tools (including IEF and AXIOM) will tell you exactly where they found the artifact. If your tool doesn’t, it’s time to switch tools; otherwise, how are you expected to verify or validate your findings?

The source is usually a strong indicator of whether the recovered data is valid. If I find chat data in a Windows DLL or similar system file, it’s unlikely to be valid (file slack is a possible exception, so it’s not impossible). If, on the other hand, it’s found in a SQLite database known to store chat data, or in a file path where other chat data is expected to be stored, the carved data is much more likely to be valid.
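The source-location reasoning can be expressed as a rough triage function. In this sketch the set of system-binary extensions, the list of expected app directories, the example Skype path and the rating strings are all hypothetical; the only fixed fact it relies on is that SQLite database files begin with the 16-byte header "SQLite format 3" followed by a null byte.

```python
from pathlib import PureWindowsPath

# SQLite database files start with this 16-byte header.
SQLITE_MAGIC = b"SQLite format 3\x00"
# Illustrative set of system-binary extensions; adjust to your case.
SYSTEM_EXTS = {".dll", ".exe", ".sys", ".mui"}


def rate_source(source_path: str, first_bytes: bytes, app_dirs: list[str]) -> str:
    """Rough triage of where a carved hit came from (hypothetical ratings)."""
    # Lower-case both sides, since Windows paths are normally case-insensitive.
    path = PureWindowsPath(source_path.lower())
    if path.suffix in SYSTEM_EXTS:
        return "unlikely"  # system binary; file slack is the main exception
    if any(PureWindowsPath(d.lower()) in path.parents for d in app_dirs):
        return "likely"    # inside a location the app is expected to use
    if first_bytes.startswith(SQLITE_MAGIC):
        return "possible"  # a database, but not one tied to the app
    return "unclear"


SKYPE_DIR = r"C:\Users\alice\AppData\Roaming\Skype"  # illustrative location
print(rate_source(r"C:\Windows\System32\kernel32.dll", b"MZ", [SKYPE_DIR]))
print(rate_source(r"C:\Users\alice\AppData\Roaming\Skype\alice.smith\main.db",
                  SQLITE_MAGIC, [SKYPE_DIR]))
```

In practice you would fill `app_dirs` with the locations you know the app in question uses on that device.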

Multiple Tools

Finally, you can verify your recovered evidence by testing with another tool that recovers the same app or artifact. Because each tool is built differently, each one carves data differently. These differences can help examiners validate what a given tool found, and a different carving technique may find additional data that wasn’t previously discovered.
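When comparing two tools, the exports rarely match field for field, so some normalization is needed before the results can be lined up. This minimal sketch assumes both exports have already been loaded as dictionaries with hypothetical `sent` (a `datetime` in the same time zone for both tools), `sender` and `body` fields; it drops sub-second precision, letter case and extra whitespace before comparing.

```python
from datetime import datetime


def record_key(rec: dict) -> tuple:
    """Normalize one exported message (hypothetical 'sent'/'sender'/'body' fields)."""
    return (
        rec["sent"].replace(microsecond=0),  # tools differ in sub-second precision
        rec["sender"].strip().lower(),
        " ".join(rec["body"].split()),       # collapse whitespace differences
    )


def compare_tools(tool_a: list[dict], tool_b: list[dict]) -> dict[str, set]:
    """Split normalized records into found-by-both and found-by-one-tool sets."""
    a = {record_key(r) for r in tool_a}
    b = {record_key(r) for r in tool_b}
    return {"both": a & b, "only_a": a - b, "only_b": b - a}


sent = datetime(2012, 3, 1, 10, 0, 0, 250000)
tool_a = [
    {"sent": sent, "sender": "Alice", "body": "see you  at 5"},
    {"sent": datetime(2014, 3, 2, 8, 0), "sender": "\x13zq", "body": "##"},
]
tool_b = [
    {"sent": sent.replace(microsecond=0), "sender": "alice ", "body": "see you at 5"},
    {"sent": datetime(2012, 3, 3, 14, 0), "sender": "bob", "body": "ok"},
]
result = compare_tools(tool_a, tool_b)
print({k: len(v) for k, v in result.items()})  # {'both': 1, 'only_a': 1, 'only_b': 1}
```

Hits that only one tool reports are the ones worth taking back to the source data: each is either a false positive from one tool or extra data that the other tool’s carving missed.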

Using multiple tools in your toolkit is essential for examiners and should be part of everyone’s standard operating procedures. Checking for false positives and verifying data are natural reasons to use different tools for the benefit of the case.

False positives happen and are often the result of deep dives and searches on data. If you aren’t seeing false positives, you need to make sure your tools are digging deeply enough. Luckily, experience and intuition can help you quickly spot and eliminate the data that is irrelevant.

For AXIOM and IEF customers: if you’re seeing a lot of false positives for a particular app or artifact and you know that app isn’t even installed on the computer or phone, report it, with sample data if possible, so that we can improve our carving signatures.

If you have any questions, feel free to reach out.

Jamie

jamie.mcquaid@magnetforensics.com